The history of medicine, as distinct from surgery, took giant steps in the 19th century when bacteria were identified and then linked to human illness. Surgery had been able to treat battle wounds for centuries although bacteriology would also lead to major advances there. For the medical doctor, however, there was little that could be accomplished for the sick prior to Louis Pasteur. Medicine in that era was concerned with diagnosis and prognosis, a significant benefit if accurate, which it sometimes was. Treatment was more harmful than effective.

William Withering had introduced one of the first effective medicines, digitalis from the foxglove plant, in 1785.

Paracelsus had discovered in the 16th century that mercury would inhibit syphilis, but that was the only previous effective use of medicine. It was said, in an era when syphilis was endemic, that “A night with Venus leads to a lifetime with Mercury,” as the treatment required continuous use to be effective. There would be no other treatment for syphilis until the 20th century.

Edward Jenner discovered the ability of cowpox infection to prevent the far more dangerous infection of smallpox. These few pioneers were bright supernovae in a dark universe of ignorance. Infectious diseases were the most common cause of death prior to the 20th century.

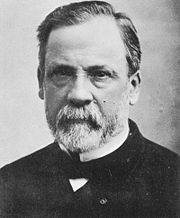

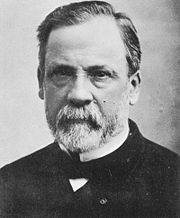

Louis Pasteur was a chemist who first recognized that living organisms were responsible for such phenomena as fermentation of wine and souring of milk. His research resulted in an age of bacteriology for the next 50 years.

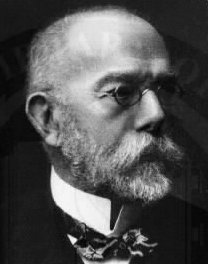

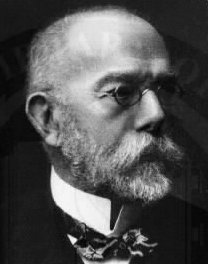

Robert Koch was a German physician who learned to grow bacteria in cultures that could be purified and subcultured. He established the principles of infection by a specific organism. Pasteur grew bacteria in liquid medium, which did not lend itself to purifying cultures. Koch began the use of solid medium, and his assistant invented the Petri dish. Koch also identified the organism that causes cholera, which is transmitted in water supplies contaminated by fecal material.

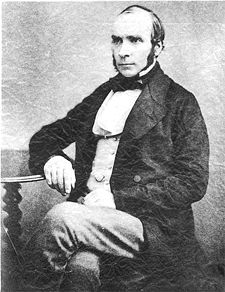

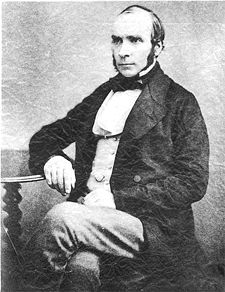

John Snow, the founder of epidemiology (along with Florence Nightingale), had identified the connection of cholera to water supplies in 1854, but he could not go further because bacteria had not yet been linked to disease.

The microscope, especially after improvements by Joseph Jackson Lister, allowed these men to see the bacteria in wounds, diseased organs and rotting flesh. Lister’s son would add the first great step in treating these diseases.

Joseph Lister, the son, was an orthopedic surgeon who learned to prevent infection by applying carbolic acid to compound fracture wounds after the fracture had been reduced. Lister was still somewhat vague about the organisms he was treating because they were still poorly visualized. In fact, that lack of proof caused great resistance to his innovation.

In 1884, Hans Christian Gram discovered that some bacteria would retain a crystal violet stain while others would not, and that this characteristic was related to other features of the organism. A powerful new tool, called Gram staining, was available to bacteriologists.

The era of the bacteriologist reached its pinnacle when Koch described the tuberculosis organism in 1882, proving that “consumption” was an infection, and then Pasteur was able to prevent rabies with a vaccine. Unfortunately, Koch’s career ended with a bit of farce as he announced a cure for tuberculosis that was, in fact, no such thing. He fled with a girlfriend to Egypt, proving there is nothing new under the sun. His other innovations survived.

Vaccines would dominate medicine until the discovery of antibacterial drugs, first by Gerhard Domagk, whose discovery of the sulfa drugs was published in 1935. A German physician, he was not permitted by Hitler to accept the Nobel Prize and received it only after the war.

Even before Domagk’s discovery, in 1928, Alexander Fleming had discovered penicillin but did not follow up his discovery after a few tentative attempts at treatment.

Ten years later, Howard Florey, an Australian physician at Oxford, resumed study of penicillin, with the result that infectious diseases caused by bacteria would recede into a secondary role in medicine. Other antibiotics were discovered and new ones continue to be synthesized. Cancer and other degenerative diseases became the most common causes of death.

The New Era

In 1977, a microbiologist named Carl Woese proposed a new kingdom of biology. The group is now called Archaea, and his proposal met considerable resistance at first. These organisms are also called extremophiles, as they were often found in extreme environments such as steam vents on the ocean floor, or in national park geysers, at temperatures far above boiling. Bacteria and most other forms of life could not exist there because proteins denature at temperatures well below those found in these environments. However, it was soon found that these organisms are widely distributed and some are quite common, such as Methanococcus, which makes swamp gas by metabolizing rotting vegetation and producing methane gas. Some varieties are even found in the gut of cows.

The genomes of over 50 varieties have now been sequenced and similarities with higher life forms have been found, placing them between the bacteria and higher forms. They may well represent the first life forms, and there is a possibility that similar organisms may be found on other planets. Since some of these organisms are capable of synthesizing carbon chains, like those in oil, an answer to the energy crisis may be found here. Some of them are capable of scrubbing CO2 from the exhaust of coal-burning power plants. Some are capable of making methane (natural gas) from coal without burning at all. This may even be possible without digging up the coal. For example, it is now known that Archaea are still making methane in abandoned coal mines. This creates danger for anyone entering these old mines but may provide a source of natural gas from residual coal that was left behind. In the future, it may be possible to inject coal deposits with a culture of Archaea and collect the gas without ever digging a mine or stripping surface layers above the coal.

The possibility of processing nuclear waste should not be ignored. Some of these organisms survive in a high-radiation environment, and other Archaea are capable of generating electricity in fuel cells.

The future is with biotechnology and the limits are not yet visible. We are entering the second Age of Bacteriology.